Hidden Markov Model

Hidden Markov Models (HMMs) characterize a two-stage stochastic process. Work on this topic was published by Leonard Baum as early as the mid-1960s. The first stage is a Markov chain whose states are not visible from the outside (hidden). The second stage generates a stochastic process of so-called observables: at each point in time, an observable output symbol is produced according to a probability distribution. The goal is to infer the sequence of hidden states from the sequence of output symbols.

HMMs are used to recognize patterns in sequential data, for example stored speech sequences in speech recognition or DNA profiles in biology. Price behavior on the stock exchange can also be modeled as an HMM. In these applications, the hidden states of the Markov chain correspond to semantic units that are ultimately to be recognized in the sequential data; such models are also referred to as semantic models. The first stage of the HMM is a discrete stochastic process, described as a sequence of random variables

S = S(1), S(2), ..., S(T)

These random variables take values from a discrete, finite set of states.

The process thus describes probabilistic state transitions in a discrete, finite state space. The stochastic process S is:

- Stationary, i.e., independent of (absolute) time t,

- Causal, i.e., the probability distribution of the random variable S(t) depends only on past states, and possibly

- Simple, i.e., the distribution of S(t) depends only on the immediately preceding state: P(S(t) | S(1), ..., S(t-1)) = P(S(t) | S(t-1)). This corresponds to a first-order HMM.

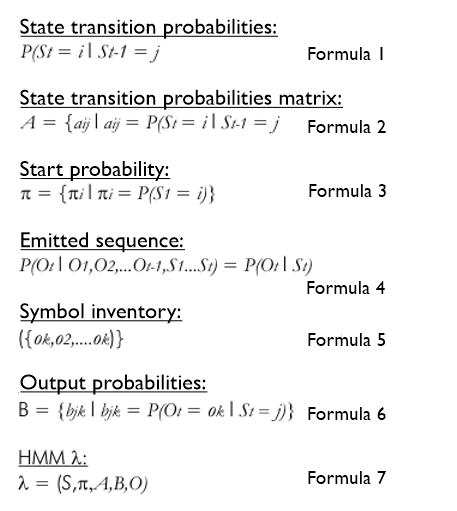

The transition probabilities can be summarized in a state transition probability matrix (formula 2). The initial state is drawn according to the starting probabilities (formula 3).
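The first stage can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `sample_chain`, the two-state example, and all numeric values are hypothetical and do not come from the text. States are represented by their indices, the transition matrix is a list of rows, and row i holds the probabilities of moving from state i to each state.

```python
import random

def sample_chain(start_probs, transition, length, rng=None):
    """Draw a state sequence S(1), ..., S(T) from a first-order Markov chain."""
    if length <= 0:
        return []
    rng = rng or random.Random()
    state_ids = list(range(len(start_probs)))
    # S(1) is drawn from the starting probabilities.
    current = rng.choices(state_ids, weights=start_probs)[0]
    sequence = [current]
    for _ in range(length - 1):
        # S(t) depends only on S(t-1): use row `current` of the matrix.
        current = rng.choices(state_ids, weights=transition[current])[0]
        sequence.append(current)
    return sequence

# Hypothetical two-state chain; the numbers are made up for illustration.
start_probs = [0.6, 0.4]
transition = [[0.7, 0.3],
              [0.4, 0.6]]
path = sample_chain(start_probs, transition, length=10, rng=random.Random(0))
```

Because the same matrix is used at every step, the sketch also reflects the stationarity assumption: the transition probabilities do not depend on the absolute time t.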

The sequence of states generated by the chain in this way is not observable from outside, i.e., it is "hidden". What is observable, however, is the sequence of so-called observations O = O(1), O(2), ..., O(T), which the stochastic process of the second stage generates (emits) at each point in time, depending only on the current state, according to formula 4.

For discrete HMMs, the observations come from a finite symbol inventory (formula 5). The matrix of output probabilities can then be specified as in formula 6.
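The two stages of a discrete HMM can be illustrated together with a small generative sketch. The function name `sample_hmm`, the symbols, and the probabilities below are assumptions chosen for illustration only. Row j of the `emission` matrix is assumed to hold the output probabilities of state j over the symbol inventory.

```python
import random

def sample_hmm(start_probs, transition, emission, symbols, length, rng=None):
    """Generate hidden states and emitted symbols from a discrete HMM."""
    rng = rng or random.Random()
    state_ids = list(range(len(start_probs)))
    states, observations = [], []
    state = None
    for t in range(length):
        # Stage 1: hidden Markov chain (start distribution at t = 0,
        # afterwards the row of the transition matrix for the last state).
        weights = start_probs if t == 0 else transition[state]
        state = rng.choices(state_ids, weights=weights)[0]
        # Stage 2: the output symbol depends only on the current state.
        symbol = rng.choices(symbols, weights=emission[state])[0]
        states.append(state)
        observations.append(symbol)
    return states, observations

# Hypothetical model: 2 hidden states, 3 output symbols.
symbols = ["a", "b", "c"]
start_probs = [0.6, 0.4]
transition = [[0.7, 0.3],
              [0.4, 0.6]]
emission = [[0.5, 0.4, 0.1],
            [0.1, 0.3, 0.6]]
hidden, observed = sample_hmm(start_probs, transition, emission,
                              symbols, length=8, rng=random.Random(1))
```

An outside observer would see only `observed`; the list `hidden` is returned here purely so that the relationship between the two stages can be inspected.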

An HMM, usually denoted λ (lambda) in the literature, is therefore fully described by the quintuple in formula 7.
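For orientation, the quantities referred to as formulas 2 to 7 are commonly written as follows in textbook notation. The symbols below (N states, K output symbols, the script letter for the state set, and the names A, B, π, V) are an assumption based on common usage and may differ from the notation of the original formulas.

```latex
\begin{aligned}
A &= [a_{ij}], & a_{ij} &= P\bigl(S(t) = j \mid S(t-1) = i\bigr), & \sum_{j=1}^{N} a_{ij} &= 1 \\
\pi &= [\pi_i], & \pi_i &= P\bigl(S(1) = i\bigr), & \sum_{i=1}^{N} \pi_i &= 1 \\
b_j(o) &= P\bigl(O(t) = o \mid S(t) = j\bigr) \\
V &= \{v_1, \dots, v_K\} \\
B &= [b_{jk}], & b_{jk} &= P\bigl(O(t) = v_k \mid S(t) = j\bigr), & \sum_{k=1}^{K} b_{jk} &= 1 \\
\lambda &= (\mathcal{S}, V, A, B, \pi), & \mathcal{S} &= \{1, \dots, N\}
\end{aligned}
```

Each row of A and B is a probability distribution, which is why the rows sum to one.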

HMMs are used for pattern recognition, especially in speech recognition, genetics (DNA patterns), and character recognition, as well as in Markov filters in the context of spam filtering.
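Recognition in these applications amounts to inferring the hidden state sequence from the observed symbols. One standard way to do this, which the text itself does not name, is the Viterbi algorithm. The following is a minimal sketch, assuming a discrete HMM whose observations are given as column indices into the output probability matrix; the function name `viterbi` and the example numbers are hypothetical.

```python
def viterbi(observations, start_probs, transition, emission):
    """Return the most probable hidden state path and its joint probability."""
    n_states = len(start_probs)
    if not observations:
        return [], 1.0
    # delta[i]: probability of the best path that ends in state i so far.
    delta = [start_probs[i] * emission[i][observations[0]]
             for i in range(n_states)]
    backpointers = []
    for obs in observations[1:]:
        new_delta, pointers = [], []
        for j in range(n_states):
            # Best predecessor of state j at this time step.
            best_prev = max(range(n_states),
                            key=lambda i: delta[i] * transition[i][j])
            pointers.append(best_prev)
            new_delta.append(delta[best_prev] * transition[best_prev][j]
                             * emission[j][obs])
        delta = new_delta
        backpointers.append(pointers)
    # Trace the best path backwards from the most probable final state.
    last = max(range(n_states), key=lambda i: delta[i])
    path = [last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, delta[last]

# Hypothetical model with 2 hidden states and 3 output symbols (indices 0..2).
start_probs = [0.6, 0.4]
transition = [[0.7, 0.3],
              [0.4, 0.6]]
emission = [[0.5, 0.4, 0.1],
            [0.1, 0.3, 0.6]]
best_path, best_prob = viterbi([0, 1, 2], start_probs, transition, emission)
# best_path == [0, 0, 1]
```

For long sequences the products of probabilities underflow, so practical implementations usually work with sums of log probabilities instead; the sketch keeps plain products for readability.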
